Improving Online Chatting

The article Two-Party Threaded Chat by Peter Arrenbrecht addresses the problem of multiple threads of discussion within a single conversation. Without face-to-face contact, the threshold for interruption is much lower and answers will not always neatly line up under their questions. A conversation may have many of these “threads”, though individual ones are usually quite short-lived. Chat clients are currently limited by their purely serial approach to inserting text into a conversation.

The solution proposed in the article is quite good, and the discussion below only expands slightly on those concepts, while illustrating them with mock screenshots in the style of iChat. iChat’s approach of using talk bubbles from opposing sides is somewhat easier to follow than the text-only examples provided in the original article and even the multi-thread view in the demo (which is likely to be a bit much for the average user).

Insertion Points

The problem boils down to insertion points for comments. Chat clients today always place new text at the bottom of the conversation, whether or not that is the most appropriate insertion point. The proposed extension is to give the user control over the insertion point and also to automatically propose the best insertion point, if possible.

The Classic Case

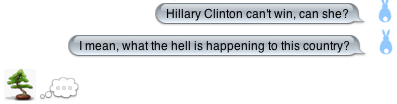

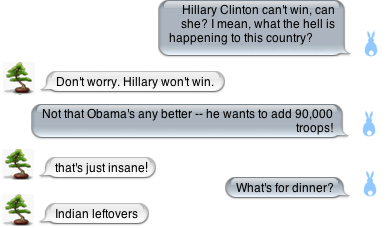

In the classic case, one party asks a question or makes a comment. The other party types a message and sends it, inserting it at the end of the conversation. If the first party did not send any messages in the meantime, then the question and answer are lined up correctly. The example below shows the insertion point as displayed in iChat as one party types (l.) and the inserted message (r.).

A Simple Interruption

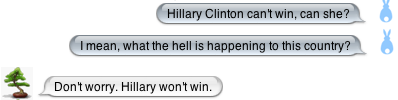

Whenever a message arrives from one party while the other party is still typing, that counts as an interruption and there are now two possible insertion points: the point in the conversation at which the receiver started typing and the end of the conversation. The user should be able to switch between these insertion points (either with a mouse click or a key combination that toggles between them), but the client should default to inserting at the original location. Once the user sends the message, both insertion points disappear and the text appears at the selected one (the original insertion point in the example below).

As you can see from the example, there can only ever be two insertion points because the user can only be typing one message and can only be interrupted once. As soon as the user sends a message, the “thread” is closed and the client manages a single insertion point again.[1]

Of course, clients that do not support this feature will continue to display conversations from newer clients serially, as they do currently.
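The interruption rule above can be sketched in a few lines of Python. This is only an illustration under assumed names (`Conversation`, `start_typing`, `receive`, `send` and `insertion_points` are all hypothetical and not taken from Peter's article or from any chat client): the client remembers where the user started typing and offers that point in addition to the end of the conversation.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Message = Tuple[str, str]  # (sender, text); a hypothetical minimal message type


@dataclass
class Conversation:
    messages: List[Message] = field(default_factory=list)
    # Index at which the local user started typing; None while not typing.
    draft_point: Optional[int] = None

    def start_typing(self) -> None:
        # Remember the insertion point only once per draft.
        if self.draft_point is None:
            self.draft_point = len(self.messages)

    def receive(self, sender: str, text: str) -> None:
        # Incoming messages are always appended at the end.
        self.messages.append((sender, text))

    def insertion_points(self) -> List[int]:
        # One point normally; two if a message arrived during typing.
        end = len(self.messages)
        if self.draft_point is None or self.draft_point == end:
            return [end]
        return [self.draft_point, end]

    def send(self, sender: str, text: str, at_end: bool = False) -> None:
        # Default to the original insertion point, as proposed above.
        end = len(self.messages)
        use_end = at_end or self.draft_point is None
        index = end if use_end else self.draft_point
        self.messages.insert(index, (sender, text))
        self.draft_point = None  # the "thread" is closed again
```

No matter how many messages arrive while the user is typing, this model never tracks more than two insertion points: the remembered draft point and the current end of the conversation.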

Targeted Insertions

At the very end of his proposal (under “Implementation”), Peter mentions being able to reference a specific comment. I (and several people with whom I chat) have been using the @-symbol for this purpose for years, using the following syntax:

@[label]: [comment]

The label refers to a unique word in the comment from the other party to which the new comment refers. It’s essentially poor-man’s threading for the dumb chat clients available today, but it’s already quite effective even without client support. When a new client sees such a targeted insertion, it should seek backwards through the conversation to find that word, then insert the new comment immediately after the sentence or comment that contained it. The exact algorithm can be refined, but let’s take a look at a simple example.
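As a rough sketch of that backward search, the following Python inserts a targeted comment directly after the most recent message from the other party that contains the label. The names `parse_target` and `insert_targeted`, the `(sender, text)` message shape, and the decision to make the colon optional are assumptions made for illustration, not part of the original proposal.

```python
import re
from typing import List, Optional, Tuple

Message = Tuple[str, str]  # (sender, text); hypothetical message shape

# Matches "@label: comment"; the colon is treated as optional here.
TARGET = re.compile(r"^@(\w+):?\s+(.*)$", re.DOTALL)


def parse_target(text: str) -> Tuple[Optional[str], str]:
    """Return (label, comment), or (None, text) for an untargeted message."""
    match = TARGET.match(text)
    if match is None:
        return None, text
    return match.group(1), match.group(2)


def insert_targeted(conversation: List[Message], sender: str, text: str) -> int:
    """Insert a message and return the index at which it was placed."""
    label, _ = parse_target(text)
    index = len(conversation)  # untargeted messages go to the end
    if label is not None:
        word = re.compile(r"\b" + re.escape(label) + r"\b", re.IGNORECASE)
        # Seek backwards for the other party's message containing the label.
        for i in range(len(conversation) - 1, -1, -1):
            other, body = conversation[i]
            if other != sender and word.search(body):
                index = i + 1
                break
    conversation.insert(index, (sender, text))
    return index
```

If the label is not found anywhere, the sketch falls back to the end of the conversation, which matches what today's clients do anyway.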

An Example

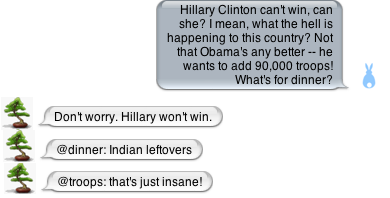

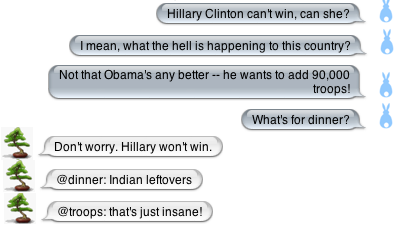

Let’s take a look at a conversation initiated by someone who makes several points at once, piling up questions for the other party to answer. The other party then answers the questions in order, using the @-symbol to let the other party know which answers correspond to which questions. In a modern chat client, this looks like one of the screenshots below (all comments in one block on the left; comments separate on the right):

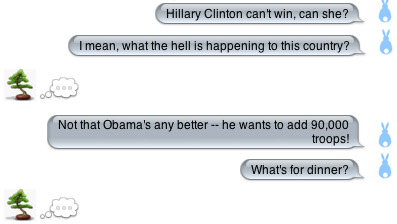

Let’s proceed with the example on the left, showing the first response from the second party below:

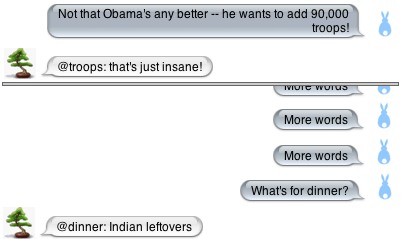

Since the response is not targeted, it just shows up below the whole block of text. The next response is targeted—using @dinner—and so causes the sentence with the word “dinner” in it (as well as everything following it, which in this case is nothing) to be split off from the main text in both clients. That response is shown below:

The third response targets a chunk out of the original text—using @troops—so that sentence is extracted and the first response moves up to remain under all the remaining untargeted text. That response is shown below:

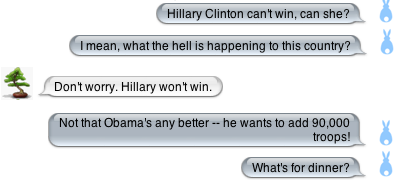
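Pulling a targeted sentence out of a larger block of text could look roughly like the following. The function name `extract_sentence` and the naive rule for sentence boundaries (whitespace after `.`, `!` or `?`) are assumptions; a real client would need something more robust, and how the extracted pieces are then ordered on screen is left to the display code.

```python
import re
from typing import Optional, Tuple


def extract_sentence(block: str, label: str) -> Tuple[str, Optional[str]]:
    """Split `block` into (remaining text, sentence containing `label`).

    Returns (block, None) when no sentence contains the label.
    """
    # Naive sentence split: break on whitespace that follows ., ! or ?
    sentences = re.split(r"(?<=[.!?])\s+", block.strip())
    word = re.compile(r"\b" + re.escape(label) + r"\b", re.IGNORECASE)
    for i, sentence in enumerate(sentences):
        if word.search(sentence):
            remaining = sentences[:i] + sentences[i + 1:]
            return " ".join(remaining), sentence
    return block, None
```

The remaining text stays together as one bubble, while the extracted sentence becomes its own bubble with the targeted response placed underneath it.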

Hiding Targets

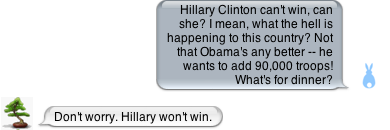

A client that understands targets in the text (and adjusts the display accordingly) could also just remove those targets from the displayed text, as shown below.

The client now looks as if the conversation had happened in the correct order, all with the help of targeting.

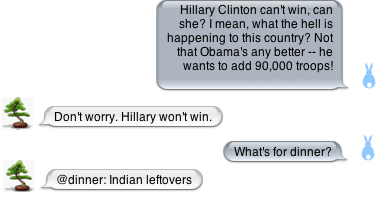
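Hiding a target is mostly a matter of stripping the leading label before the text is displayed. A minimal sketch, assuming the `@[label]: [comment]` syntax from above with an optional colon (the name `display_text` is hypothetical):

```python
import re

# Leading "@label:" or "@label " prefix of a targeted comment.
_TARGET_PREFIX = re.compile(r"^@\w+:?\s+")


def display_text(raw: str) -> str:
    """Return the comment without its leading target, if it has one."""
    return _TARGET_PREFIX.sub("", raw, count=1)
```

Only a label at the very start of a comment is removed, so an @-symbol in the middle of a sentence is left alone.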

Off-screen Targets

All of the targets in the example above are still on-screen, so the chat client doesn’t have to do anything other than move the blobs of text around. However, if a target has already scrolled off-screen, the client should display that part of the conversation again. One way to do this is to split the window to show the target as well as the end of the conversation, as shown below.

Targeted Insertion with Splitter


In this case, both regions are scrollable, showing different locations within the same conversation. Since these targeted discussion threads rarely stay open for more than a few comments, the client doesn’t need to offer any way of switching between the splitter areas. In order to continue the top part of the conversation in the example above, a user could either re-use the @troops label (in which case the comment is inserted after all other comments that used that label) or target a word in that comment, like @insane. If no label is used, the comment is inserted at the end of the conversation, as usual.
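The rule for re-using a label can be sketched as a small extension of the backward search: find the comment that originally contained the word, then skip past every reply that already used that label. The function name `thread_insert_index` and the `(sender, text)` message shape are assumptions for illustration only.

```python
import re
from typing import List, Tuple

Message = Tuple[str, str]  # (sender, text); hypothetical message shape


def thread_insert_index(conversation: List[Message], label: str) -> int:
    """Return the index at which a comment targeting `label` belongs."""
    word = re.compile(r"\b" + re.escape(label) + r"\b", re.IGNORECASE)
    reply = re.compile(r"^@" + re.escape(label) + r"\b", re.IGNORECASE)
    # Seek backwards for the comment containing the word, ignoring
    # earlier replies that merely start with "@label".
    for i in range(len(conversation) - 1, -1, -1):
        text = conversation[i][1]
        if word.search(text) and not reply.match(text):
            index = i + 1
            # Skip all replies that already used this label.
            while index < len(conversation) and reply.match(conversation[index][1]):
                index += 1
            return index
    return len(conversation)  # no match: insert at the end, as usual
```

With this rule, `@troops` continues the existing thread after its last reply, while `@insane` starts a new one directly under the reply that contained that word.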

This approach keeps the number of things a user has to know to a minimum, letting all new functionality be controlled by the use of labels.