Entity Framework: Be Prepared

Published by marco on

This article was originally published on the Encodo Blogs. Browse on over to see more!

In August of 2008, Microsoft released the first service pack (SP1) for Visual Studio 2008. It included the first version (1.0) of Microsoft’s generalized ORM, the Entity Framework. We at Encodo were quite interested as we’ve had a lot of experience with ORMs, having worked on several of them over the years. The first was a framework written in Delphi Pascal that included a sophisticated ORM with support for multiple back-ends (SQL Server, SQLAnywhere and others). In between, we used Hibernate for several projects in Java, but moved on quickly enough.[1] Most recently, we’ve developed Quino in C# and .NET, with which we’ve developed quite a few WinForms and web projects. Though we’re very happy with Quino, we’re also quite interested in the sophisticated integration with LINQ and multiple database back-ends offered by the Entity Framework. Given that, two of our more recent projects are being written with the Entity Framework, keeping an eye out for how we could integrate the experience with the advantages of Quino.[2]

What follows are first impressions acquired while building the data layer for one of our projects. The database model has about 50 tables, is highly normalized and is pretty straightforward with auto-incremented integer primary keys everywhere, and single-field foreign keys and constraints as expected. Cascaded deletes are set for many tables, but there are no views, triggers or stored procedures (yet).

Eventually, EF will map your model and the runtime performs admirably (so far). However, designing that model is not without its quirks:

- Be prepared to have minor changes in the database result in a dozen errors on the mapping side.

- Be prepared for error messages so cryptic, you’ll think the C++ template compiler programmers had some free time on their hands.

- Be prepared to edit XML by hand when the designer abandons you; you’ll sometimes have to delete swathes of your XML in order to get the designer to open again.

- Be prepared to wait quite a while for the designer to refresh itself once the model has gotten larger.

- Be prepared to regularly restart Visual Studio 2008 once your model has gotten bigger; updating the model from the database even for a minor change either takes a wholly unacceptable amount of time or sends the IDE into limbo; either way, you’re going to have to restart it.

To be fair, this is a 1.0 release; it is to be expected that there are some wrinkles to iron out. However, one of the wrinkles is that a model with 50 tables is considered “large”.

Large Model Support

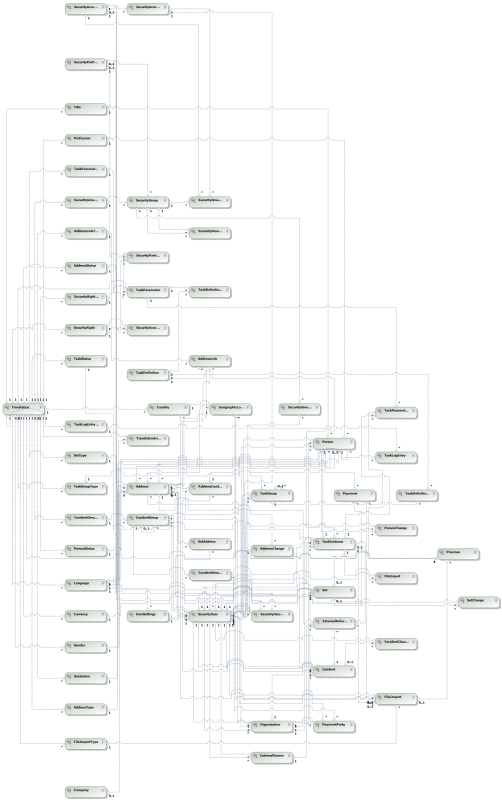

With 50 tables and the designer slowing down, you’re forced to at least consider options for splitting the model. The graphic below shows the model for our application:

Mid-sized Entity Model

Mid-sized Entity Model

There exists official support for splitting the model into logical modules, but it’s just a bit complex: you have to significantly change the generated files in order to get it to work, and there is no design-time support whatsoever for indicating which entities belong to which modules. The blog posts Working With Large Models In Entity Framework (Part 1 and Part 2), written by a member of the ADO.NET team, offer instructions for how to do this, but you’ll have to satisfy one of the following conditions:

- You don’t understand how bad it is to alter generated code, which you’ll just have to alter all over again whenever the database model changes.

- You’re new to this whole programming thing and aren’t sufficiently terrified by a runtime-only, basically unsupported feature in a version 1.0 framework.

- Development with a single model has gotten so painful that you have to bite the bullet and go for it.

- The previous condition, plus you have enough money and/or time in the budget to build a toolset that applies your modularization as a separate build step after EF has generated its classes.[3]

The low-level, runtime-only solution offered by the ADO.NET team ostensibly works, though it probably isn’t very well-tested. Designer support and better runtime integration would be key to supporting larger models, but the comments on the second blog post indicate that designer support likely won’t make version 2 of the Entity Framework. This is a shocking admission, as it means that EF won’t scale on the development side until the version after that at the earliest.

The Designer

The designer is probably the weakest element of the Entity Framework; it is quite slow and requires a lot of work with the right mouse button.

- Once you’ve got a lot of entities, you’ll want to collapse all of them in the diagram and redo the automatic layout so that you can see your model better. This takes much longer than you would think it should. Once you’ve collapsed a class, however, error messages no longer jump to the diagram, so you’ll have to re-expand them all in order to figure out in which entity an error occurred.

- “Show in Designer” doesn’t work with collapsed classes; even when classes are expanded it’s extremely difficult to determine which class is selected because the selection rectangle gets lost in the tangle of lines that indicate relationships.

- Simply selecting a navigation property and pressing Delete does nothing; you have to select each property individually, right-click it and choose Select Association. The association is highlighted, albeit very faintly, after which you can press Delete to get rid of it.

If you’re right in the middle of a desperate action to avoid reverting to the last version of your model from source control, you’ll be pleased to discover that, sometimes, Visual Studio will prevent you from opening the model, either in visual- or XML-editing mode. Neither a double-click in the tree nor explicitly selecting “Open” from the shortcut menu will open the file. The only thing for it is to re-open the solution, but at least you don’t lose any changes.

Synchronizing with the Database

The biggest time-sink in EF is the questionable synchronization with the database. Often, you will be required to intervene and “help” EF figure out how to synchronize—usually by deleting chunks of XML and letting it re-create them.

- You may think that removing a table from the mapping will let you re-import it in its entirety from the database. This is not the case; be prepared to remove the last traces of that table name from the XML before you can re-import it.

- Be prepared for duplicated properties when the mapper doesn’t recognize a changed property and adds a near-identical sibling named “property1”.

- Be prepared for EF to get confused when you’ve made changes; the bigger your model is, the more likely this is to happen and the less helpful the error messages. They will refer to errors on line numbers 6345 and 9124 and half the file, when opened as XML, will be underlined in wavy blue to indicate a compile error.

- Be prepared to delete associations between entities that have changed on the database (e.g. have become nullable) because the EF updater does not notice the change and update the relationship type from 1 (One) to 0..1 (Zero or One).

- If you have objects in the database that do not match the constraints of the entity model, they cannot even be loaded by EF (e.g. if, as above, you’ve made a property nullable in the database, but the EF model still thinks it cannot be nullable).

Here’s a development note written after making minor changes to the database:

“I added a couple of relationships between existing tables and there were suddenly 17 compile errors. I desperately tried to delete those relationships from the editor, to no avail. I opened it as XML and started deleting the affected sections in the hopes that I would be able to compile again and re-sync with the database. After a few edits, the editor would no longer open and the list of errors was getting longer as the infection spread; I would have to cut out the cancer. The cancer, in this case, was all of the classes involved in the new relationships. Luckily, they were mostly quite small and mostly used the identifiers from the database.[4] Once the model compiled again (the code did not build because it depended on generated code that was no longer generated), I could open the editor and re-sync with the database. Now it worked and had no more problems. All this without touching the database, which places the blame squarely on EF and its tendency to get confused.”

As you can imagine, adventures like these can take quite a bit of time and break up the development flow considerably.

Initialization of Dates

The problem with dates all starts with this error message:

Be prepared to guess which of your several DateTime fields is causing the error because the error message doesn’t mention the field name. Or the class name either, if you’ve had the audacity to add several different types of objects—or, God forbid, a whole tree of objects—before calling SaveChanges().

This error may come as a surprise because you’ve actually set default values in the database for all non-nullable date-time fields. Unfortunately, the EF schema reader does not synchronize non-scalar default values, so the default value of getdate() set in the database is not automatically included in the model. Since the entity model doesn’t know that the database has a default value, but it does know that the field cannot be null, it requires a value. If you don’t provide one, the property simply keeps the CLR default for DateTime, which is DateTime.MinValue (1 January 0001). The database does not accept this value because it lies outside the range of SQL Server’s datetime type, which starts at 1 January 1753, so we have to set the value ourselves, even though we’ve already set the desired default on the database.

To add insult to injury, the designer does not allow non-scalar values (e.g. you can’t set DateTime.Now in the property editor), so you have to set non-scalar defaults in the constructors that you’ll declare by hand in the partial classes for all EF objects with dates.[5]
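A minimal sketch of such a hand-written constructor follows. The entity Order and its TimeCreated/TimeModified properties are hypothetical, and the first partial declaration merely stands in for the code EF would generate, so that the example compiles on its own.

```csharp
using System;

// Stand-in for the EF-generated half of a hypothetical entity.
// In a real project, this part lives in the generated designer file.
public partial class Order
{
    public DateTime TimeCreated { get; set; }
    public DateTime TimeModified { get; set; }
}

// Hand-written half: the model doesn't know about the database's
// getdate() defaults, so the constructor supplies them instead.
public partial class Order
{
    public Order()
    {
        // Read the clock once so both fields get the same value.
        DateTime now = DateTime.Now;
        TimeCreated = now;
        TimeModified = now;
    }
}
```

Note that DateTime.Now reads the client’s clock rather than the server’s, so these values can differ slightly from what getdate() would have produced.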

In order to figure out which date-time is causing a problem once you think you’ve set them all, your best bet is to debug the Microsoft sources so you can see where ADO.NET throws the exception. SQL Profiler is unfortunately of no use because the validation errors occur before the command is sent to the database. To keep things interesting, the Entity Framework sources are not available yet.
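A cheaper alternative might be to check the entities yourself before calling SaveChanges(). The helper below is our own illustrative sketch, not part of EF; DateRangeChecker and FindOutOfRangeDates are hypothetical names. It assumes the columns use SQL Server’s datetime type and uses reflection to list every DateTime property whose value is earlier than that type’s minimum of 1 January 1753.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical helper, not part of the Entity Framework: reports which
// date properties would overflow a SQL Server "datetime" column.
public static class DateRangeChecker
{
    // Earliest value a SQL Server "datetime" column can store (the same
    // point in time as System.Data.SqlTypes.SqlDateTime.MinValue).
    private static readonly DateTime SqlDateTimeMin = new DateTime(1753, 1, 1);

    // Returns "TypeName.PropertyName" for every public DateTime or DateTime?
    // property whose current value is earlier than SqlDateTimeMin, such as
    // an unset field still holding DateTime.MinValue.
    public static List<string> FindOutOfRangeDates(object entity)
    {
        if (entity == null)
        {
            throw new ArgumentNullException("entity");
        }

        var offenders = new List<string>();
        Type type = entity.GetType();
        foreach (PropertyInfo property in type.GetProperties(BindingFlags.Public | BindingFlags.Instance))
        {
            bool isDate = property.PropertyType == typeof(DateTime)
                || property.PropertyType == typeof(DateTime?);
            // Skip non-date properties and indexers (which need arguments).
            if (!isDate || property.GetIndexParameters().Length > 0)
            {
                continue;
            }

            // A null DateTime? comes back as null, so the "is" test skips it.
            object value = property.GetValue(entity, null);
            if (value is DateTime && (DateTime)value < SqlDateTimeMin)
            {
                offenders.Add(type.Name + "." + property.Name);
            }
        }
        return offenders;
    }
}
```

Calling it on each object you’re about to save (in EF 1.0, presumably the Entity of each non-relationship entry returned by ObjectStateManager.GetObjectStateEntries(EntityState.Added | EntityState.Modified)) names the offending class and property, which is exactly what the error message leaves out.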

Using Transactions

The documentation recommends using TransactionScope, which uses the DTS (Distributed Transaction Services). If the database is running locally, you should have no trouble; at most, you’ll have to start the DTC[6] service. If the database is on a remote server, then you’ll need to do the following:

- Enable remote network access for the MSDTC by opening the firewall for that application and opening access in that application itself; see Troubleshooting Problems with MSDTC for more information. The quickest solution is to simply use non-authenticated communication for development servers on a closed network.

- If that doesn’t work, then you’ll have to dig deeper into your firewall problems. How do you know when you’re an enterprise developer? When you have to read through a manual like the following: How to troubleshoot MS DTC firewall issues.

Any troubles you may experience with the DTC are unrelated to EF development; they’re just the pain of working with highly integrated, security-aware software. That’s not to say that the experience is pleasant when something is misconfigured, only that I am reserving judgment until later.
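For reference, the recommended pattern looks roughly like the sketch below. TransactionHelper and RunInTransaction are our own illustrative names; TransactionScope itself lives in the System.Transactions assembly, which a .NET Framework project has to reference explicitly.

```csharp
using System;
using System.Transactions;

// Hypothetical helper illustrating the TransactionScope pattern.
public static class TransactionHelper
{
    // Runs the work inside an ambient transaction. Connections opened in
    // the scope (e.g. by ObjectContext.SaveChanges()) enlist automatically;
    // if a second connection or resource manager enlists, the transaction
    // may be promoted to a distributed one coordinated by MSDTC.
    public static void RunInTransaction(Action work)
    {
        if (work == null)
        {
            throw new ArgumentNullException("work");
        }

        using (var scope = new TransactionScope())
        {
            work();
            // Mark the transaction as successful; if work() throws, this
            // line is skipped and disposing the scope rolls everything back.
            scope.Complete();
        }
    }
}
```

A call such as TransactionHelper.RunInTransaction(() => context.SaveChanges()), where context stands for your generated ObjectContext, would then commit or roll back as a unit; whether the DTC gets involved depends on what enlists inside the scope.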

Common Error Messages

The following section includes solutions for specific errors that crop up more often during EF model development.

Mapping Fragments…

Error 1 Error 3007: Problem in Mapping Fragments starting at lines 1383, 1617: Non-Primary-Key column(s) [ColumnName] are being mapped in both fragments to different conceptual side properties - data inconsistency is possible because the corresponding conceptual side properties can be independently modified.

You have most likely mapped the property identified by ColumnName as both a scalar and navigational property. This usually happens in the following situation:

- Add a foreign-key property on the database (do not create a constraint).

- Update the entity model from the database; a scalar property is added to your model.

- Add the foreign-key constraint to the database.

- Update the entity model from the database; a navigational property is added to your model, but the scalar property is not removed.

To fix the conflict, simply remove the scalar property manually.

Cardinality Constraints…

You have most likely created a cascading relationship in the database and the EF editor has failed to properly update the model. It seems that there is no way to determine from the designer whether or not an association has delete or update rules. According to the blog post, Cascade delete in Entity Framework, the designer sometimes fails to update the association in both the physical and entity mappings in the XML file, so you have to add the rule by hand. See the article for instructions.

Conclusion

The database-design phase is more difficult than it should be, but it is navigable. You end up with a very usable, generated set of classes which nicely integrate with data-binding on controls. We will soldier on and bring news of our experiences on the runtime front.

[5] … timeCreated/timeModified fields, you’ve got a lot of work ahead of you.

[6] DTS, the Distributed Transaction Services. However, the actual Windows service is called the DTC, the Distributed Transaction Coordinator.