OMG, really? AI stuff again?

Published by marco on

Drag-Drop Image Conversion by Simon Willison is a gist containing the conversation he had with ChatGPT to build a drag-&-drop image converter.

First of all, he started working on it on April 1st, but it's hard to believe that he's pulling a prank (he doesn't seem the type), so I'll give him the benefit of the doubt. Assuming that this is real, it's impressive that ChatGPT can turn those prompts into a working application.

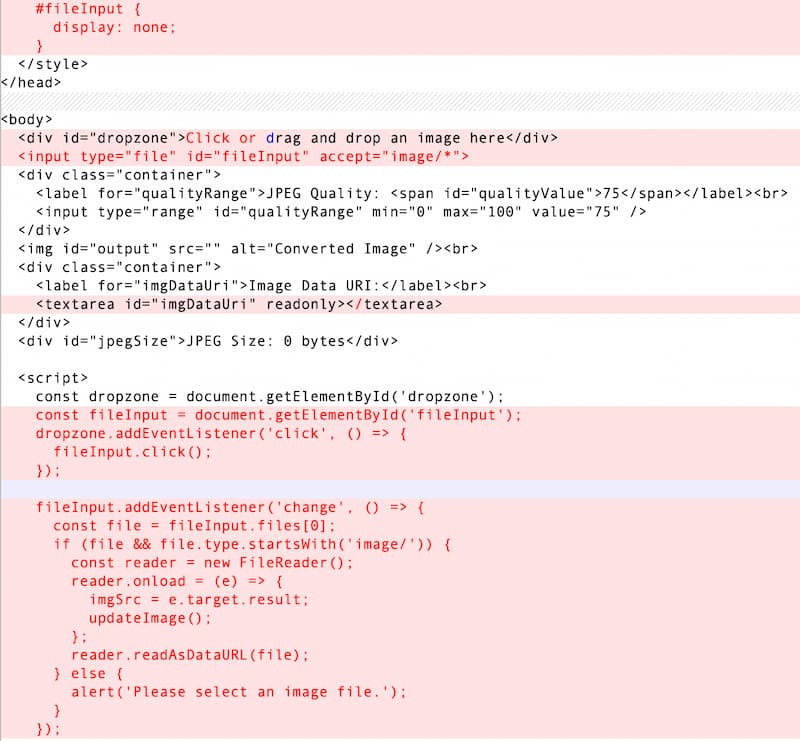

Although … did it? If you copy/paste any of its examples into an HTML page, none of them does what ChatGPT says it does.

The drag-&-drop doesn’t work. The output is just most of the code repeated in the text box.

The code looks impressive and is much closer to working than random code would be, but it doesn't work. If you don't know how to program, can you fix it? Of course not.

The fixes Willison ended up making were far from cosmetic.

Diff showing non-cosmetic changes

Still, let’s pretend that they had worked or gotten much closer to working. What if everyone could build code like this?

Hey, maybe it brings back the spirit of experimentation from the early days of the web, when everyone was writing HTML directly. Maybe these kinds of small, simple tools will start showing up again, especially now that a machine can write them.

I wonder, though, where it got this code from. Did it really build it mostly on its own? Or would we find a remarkably similar version somewhere in the vast corpus it was trained on?

Another question is: what are the implications if we were to start building software this way? Do we just dump our notions of architecture and a common coding style? A lot of considerations about maintainability, consistency, onboarding, and so on go into our software today. Do we just throw all of that overboard and move to a patchwork of one-off components?

If we’re being honest, isn’t this how a lot of programmers are already building code for their employers? Just copy/pasting stuff together and crossing their fingers?

How to use AI to do practical stuff: A new guide by Ethan Mollick (One Useful Thing)

“You often need to have a lot of ideas to have good ideas. Not everyone is good at generating lots of ideas, but AI is very good at volume. Will all these ideas be good or even sane? Of course not. But they can spark further thinking on your part.”

I suppose this beats having friends or coworkers. Apparently the film "Her" was utopian, not dystopian.

“Summarize texts. I have pasted in numerous complex academic articles and asked it to summarize the results, and it does a good job!”

How the hell are you in a position to judge? You said earlier that it lies all the time, that it has no mechanism for admitting defeat because, for it, defeat doesn't exist. It's generating text, so it always succeeds. There's no meaning for it to get wrong. It's like reading tea leaves: the cup doesn't know how to arrange the leaves, and the meaning is inferred solely by the reader.

If you don't know what the paper is about, and you know your tool's reputation for just making shit up, how can you possibly think that you can judge whether the summary it produced is representative?

“If you don’t check for hallucinations, it is possible that you could be taught something inaccurate. Use the AI as a jumping-off point for your own research, not as the final authority on anything. Also, if it isn’t connected to the internet, it will make stuff up.”

Hahahahaha sure. That’s exactly how a lazy, conspiracy-obsessed society treats technology and information. This guide actually applies to using the Internet in general, but almost nobody’s ever followed it. People just inhale information, with the only vetting process being “am I being entertained?”

Also, this is exactly the lesson he ignored above when he claimed that the AI did a good job of summarizing complex academic papers.

Schillace Laws of Semantic AI (Microsoft Learn)

“Don’t write code if the model can do it; the model will get better, but the code won’t.”

So treat the prompt like a high-level language that targets a compiler that makes things up and whose workings we don't understand. Interesting. So maybe just feed your requirements directly into the machine and hope for the best? At some point, it will come up with something that actually works?

The code won't get better on its own, but it won't get worse either. It will continue to do what it says on the tin. We may discover more negative ramifications, but what the code does will not change. The quality of the code produced by a prompt (or a series of prompts) will change but, contrary to what this rule strongly implies, not necessarily only for the better.

“Uncertainty is an exception throw. Because we are trading precision for leverage, we need to lean on interaction with the user when the model is uncertain about intent. Thus, when we have a nested set of prompts in a program, and one of them is uncertain in its result (“One possible way…”) the correct thing to do is the equivalent of an “exception throw” − propagate that uncertainty up the stack until a level that can either clarify or interact with the user.”
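Translated into ordinary code, the law describes something like the following minimal Python sketch. Everything in it is hypothetical: `fake_model` stands in for a real model call with canned answers, and `UncertainResult`, `run_prompt`, `convert_image`, `convert_all`, and `top_level` are names I made up for illustration. The innermost level throws when an answer hedges, the middle level lets the exception pass, and only the outermost level, which can talk to the user, catches it and retries with a clarified intent.

```python
from typing import Callable, Optional

# Phrases we (arbitrarily) treat as the model signalling doubt about intent.
HEDGES = ("one possible way", "it depends", "i'm not sure")


class UncertainResult(Exception):
    """Thrown when a prompt's answer hedges instead of committing."""

    def __init__(self, question: str) -> None:
        super().__init__(question)
        self.question = question


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, returning canned answers."""
    if "best format" in prompt:
        return "One possible way is PNG, but JPEG would also work."
    return f"Done: {prompt}"


def run_prompt(prompt: str) -> str:
    """Innermost level: call the model and throw if the answer hedges."""
    answer = fake_model(prompt)
    if any(hedge in answer.lower() for hedge in HEDGES):
        raise UncertainResult(f"Clarify {prompt!r}; the model said {answer!r}")
    return answer


def convert_image(name: str, fmt: Optional[str] = None) -> str:
    """Nested prompt: without an explicit format, the intent is ambiguous."""
    target = fmt if fmt else "the best format"
    return run_prompt(f"convert {name} to {target}")


def convert_all(names: list[str], fmt: Optional[str] = None) -> list[str]:
    """Middle level: it can't clarify anything, so it lets the exception pass."""
    return [convert_image(name, fmt) for name in names]


def top_level(names: list[str], ask_user: Callable[[str], str]) -> list[str]:
    """Outermost level: the only one that can talk to the user."""
    try:
        return convert_all(names)
    except UncertainResult as doubt:
        fmt = ask_user(doubt.question)  # resolve the uncertainty with the user
        return convert_all(names, fmt)  # retry with the clarified intent
```

Calling `top_level(["cat.png"], lambda question: "JPEG")` returns `["Done: convert cat.png to JPEG"]`. Detecting the hedge with a phrase list is a crude assumption of mine; the law itself doesn't say how a program would know that the model is uncertain.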

Understandable, but it sounds tedious and fraught. It moves us further away from treating coding as an engineering discipline. Maybe something will come of it (maybe it's how everyone will be coding in ten years!), but it feels very woo-woo and very hypey right now. I can't tell the difference between this technology and an actual scam, except that this technology kind of looks like it does something useful.

It reminds me of a scam in some cities: people pose as public-transportation workers and sell you tickets. The tickets actually work, but they're only valid for the smallest zone. You pay for five or six zones, but you can't actually travel that far. So far, AI reminds me of that.